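Managing Your K8s Cluster with KubeSphere

KubeSphere's vision is to build a cloud-native distributed operating system with Kubernetes as its kernel. Its architecture makes it easy to integrate third-party applications and cloud-native ecosystem components in a plug-and-play fashion, and it supports unified distribution and operations management of cloud-native applications across multiple clouds and clusters.

Article URL: https://blog.focc.cc/archives/134

This article installs KubeSphere on top of an existing K8s cluster, following the official documentation.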


| Service | Version |
|---|---|
| K8s | v1.18.0 |
| KubeSphere | v3.1.0 |

1. Preparation

1.1 Check the K8s cluster version

To install KubeSphere v3.1.0 on Kubernetes, your Kubernetes version must be v1.17.x, v1.18.x, v1.19.x, or v1.20.x.

The output shows GitVersion:"v1.18.0", so my cluster meets the version requirement.

[root@k8smaster ~]# kubectl version

Client Version: version.Info{Major:"1", Minor:"18", GitVersion:"v1.18.0", GitCommit:"9e991415386e4cf155a24b1da15becaa390438d8", GitTreeState:"clean", BuildDate:"2020-03-25T14:58:59Z", GoVersion:"go1.13.8", Compiler:"gc", Platform:"linux/amd64"}

Server Version: version.Info{Major:"1", Minor:"18", GitVersion:"v1.18.0", GitCommit:"9e991415386e4cf155a24b1da15becaa390438d8", GitTreeState:"clean", BuildDate:"2020-03-25T14:50:46Z", GoVersion:"go1.13.8", Compiler:"gc", Platform:"linux/amd64"}
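The version check can also be scripted. The following is a minimal sketch that assumes the `kubectl version` output format shown above; the function names `server_git_version` and `is_supported_k8s_version` are made up for illustration.

```shell
#!/usr/bin/env bash

# Extract the server GitVersion (e.g. v1.18.0) from `kubectl version`
# output supplied on stdin. Assumes the long output format shown above.
server_git_version() {
  sed -n 's/^Server Version:.*GitVersion:"\([^"]*\)".*/\1/p'
}

# Return 0 if the given version is in the range KubeSphere v3.1.0
# supports (v1.17.x to v1.20.x), non-zero otherwise.
is_supported_k8s_version() {
  local version="${1#v}"        # strip the leading "v"
  local major="${version%%.*}"  # text before the first dot
  local rest="${version#*.}"    # text after the first dot
  local minor="${rest%%.*}"     # text between the first and second dot
  minor="${minor%%[!0-9]*}"     # drop any non-numeric suffix
  [ "$major" = "1" ] && [ -n "$minor" ] &&
    [ "$minor" -ge 17 ] && [ "$minor" -le 20 ]
}

# Usage (requires access to the cluster):
#   v=$(kubectl version | server_git_version)
#   is_supported_k8s_version "$v" && echo "$v is supported"
```

Parsing the server line rather than the client line matters here, because it is the cluster version, not the local kubectl version, that KubeSphere cares about.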

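1.2 Check the cluster's available resources

Available CPU > 1 core; memory > 2 G

Looks a bit tight~ My physical machine only has 16 GB, after all. Let's grit our teeth and see whether it comes up, haha.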

[root@k8smaster ~]# free -g

total used free shared buff/cache available

Mem: 2 0 0 0 1 1

Swap: 0 0 0
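Because `free -g` only reports whole gibibytes, the numbers above are quite coarse. As a rough sketch, the available memory can be read in MiB from `/proc/meminfo` instead; the helper name `mem_available_mib` is hypothetical.

```shell
#!/usr/bin/env bash

# Print MemAvailable in MiB, reading /proc/meminfo-style text from stdin.
# /proc/meminfo reports the value in kB, so divide by 1024.
mem_available_mib() {
  awk '/^MemAvailable:/ { printf "%d\n", $2 / 1024 }'
}

# Usage on a node:
#   mem_available_mib < /proc/meminfo   # available memory in MiB
#   nproc                               # number of CPU cores
```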

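1.3 Check the cluster's default StorageClass

The Kubernetes cluster must have a default StorageClass configured (run kubectl get sc to confirm).

Mine doesn't have one~ so let's create one backed by NFS.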
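In the `kubectl get sc` listing, the default class is marked with `(default)` after its name. A small sketch that picks it out of the listing (the function name `default_storage_class` is made up for illustration):

```shell
#!/usr/bin/env bash

# Print the name of the default StorageClass, reading `kubectl get sc`
# output from stdin. Prints nothing when no class is marked (default).
default_storage_class() {
  awk 'NR > 1 && $2 == "(default)" { print $1 }'
}

# Usage (requires access to the cluster):
#   kubectl get sc | default_storage_class
```

An empty result means no default StorageClass is set and one has to be created or marked before installing.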

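Creating a StorageClass

A StorageClass gives administrators a way to describe the "classes" of storage they offer. Different classes might map to quality-of-service levels, to backup policies, or to arbitrary policies determined by the cluster administrators. Kubernetes itself is unopinionated about what the classes represent. This concept is sometimes called "profiles" in other storage systems.

First we need a machine running NFS to act as the data storage server.

I'm setting up one more virtual machine (192.168.182.131) as the NFS server.

Turn off the firewall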

systemctl stop firewalld

systemctl disable firewalld

Set the hostname

hostnamectl set-hostname k8snfs

Install NFS

yum install -y nfs-utils

Configure the export path

vi /etc/exports

/data/nfs *(rw,no_root_squash)

Make sure the export directory actually exists

cd /

mkdir -p data/nfs
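An entry in `/etc/exports` has the form `path client(options)`, with the options in parentheses directly after the client and no space in between. The sketch below is a simplified sanity check for that shape; it only handles one client per line, and the function name `exports_syntax_ok` is hypothetical.

```shell
#!/usr/bin/env bash

# Return 0 if every non-blank, non-comment line on stdin looks like
#   <absolute path> <client>(<options>)
# Simplified: accepts a single client per line only.
exports_syntax_ok() {
  # Strip comments and blank lines, then succeed only if no remaining
  # line fails to match the expected shape.
  ! grep -Ev '^[[:space:]]*(#|$)' |
    grep -Eqv '^/[^[:space:]]*[[:space:]]+[^[:space:]()]+\([^()[:space:]]+\)[[:space:]]*$'
}

# Usage on the NFS server:
#   exports_syntax_ok < /etc/exports && exportfs -ra   # reload the export table
#   showmount -e 192.168.182.131                       # list what is exported
```

`exportfs -ra` and `showmount -e` both ship with nfs-utils; running `showmount -e` from a worker node is a quick way to confirm the export is reachable before Kubernetes tries to mount it.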

Install NFS on the K8s cluster's worker nodes and start it

yum install -y nfs-utils

systemctl start nfs

Back on the NFS server, start the NFS service

systemctl start nfs

Check that it is running

ps -ef |grep nfs

Download the YAML files on the K8s master node

wget https://raw.githubusercontent.com/kubernetes-retired/external-storage/master/nfs-client/deploy/deployment.yaml

wget https://raw.githubusercontent.com/kubernetes-retired/external-storage/master/nfs-client/deploy/rbac.yaml

wget https://raw.githubusercontent.com/kubernetes-retired/external-storage/master/nfs-client/deploy/class.yaml

In deployment.yaml, change the NFS server and path to those of your own NFS server

...

env:

- name: PROVISIONER_NAME

value: fuseim.pri/ifs

- name: NFS_SERVER

value: 192.168.182.131

- name: NFS_PATH

value: /data/nfs

volumes:

- name: nfs-client-root

nfs:

server: 192.168.182.131

path: /data/nfs
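If you prefer not to edit the file by hand, the substitution can be sketched with sed. This assumes the downloaded file still carries placeholder values such as 10.10.10.60 and /ifs/kubernetes; that is an assumption about the upstream file, so check your copy first. The function name `set_nfs_target` is made up for illustration.

```shell
#!/usr/bin/env bash

# Replace the placeholder NFS server and path in a provisioner manifest.
# Args: <file> <old server> <old path> <new server> <new path>
# Note: the old values are used as sed patterns, so "." matches any character.
set_nfs_target() {
  local file="$1" old_server="$2" old_path="$3" new_server="$4" new_path="$5"
  # "|" is the sed delimiter so the slashes in the paths need no escaping.
  sed -e "s|${old_server}|${new_server}|g" \
      -e "s|${old_path}|${new_path}|g" \
      "$file" > "${file}.tmp" && mv "${file}.tmp" "$file"
}

# Usage (the old values are assumed placeholders, verify them in your file):
#   set_nfs_target deployment.yaml 10.10.10.60 /ifs/kubernetes 192.168.182.131 /data/nfs
#   grep -n -A1 -E 'NFS_SERVER|NFS_PATH|server:|path:' deployment.yaml
```

Both the `env` entries and the `volumes` section need the same values, which is why a global replacement is used here.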

Then create them one by one and check their status

[root@k8smaster storageclass]# kubectl apply -f deployment.yaml

deployment.apps/nfs-client-provisioner created

[root@k8smaster storageclass]# kubectl apply -f rbac.yaml

serviceaccount/nfs-client-provisioner created

clusterrole.rbac.authorization.k8s.io/nfs-client-provisioner-runner created

clusterrolebinding.rbac.authorization.k8s.io/run-nfs-client-provisioner created

role.rbac.authorization.k8s.io/leader-locking-nfs-client-provisioner created

rolebinding.rbac.authorization.k8s.io/leader-locking-nfs-client-provisioner created

[root@k8smaster storageclass]# kubectl apply -f class.yaml

[root@k8smaster storageclass]# kubectl get po

NAME READY STATUS RESTARTS AGE

nfs-client-provisioner-65c77c7bf9-54rdp 1/1 Running 0 24s

nginx-f54648c68-dwc5n 1/1 Running 2 4d23h

[root@k8smaster storageclass]# kubectl get sc

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

managed-nfs-storage fuseim.pri/ifs Delete Immediate false 16m

Set the default StorageClass

kubectl patch storageclass managed-nfs-storage -p '{"metadata": {"annotations":{"storageclass.kubernetes.io/is-default-class":"true"}}}'

[root@k8smaster storageclass]# kubectl get sc

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

managed-nfs-storage (default) fuseim.pri/ifs Delete Immediate false 21m

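With the preparation done, let's move on to a quick deployment.

2. Deploy KubeSphere

Once your existing Kubernetes cluster meets all the requirements, you can use kubectl to install KubeSphere with the default minimal package.

2.1 Run the install commands

After running the two kubectl apply commands, we can see that a CustomResourceDefinition, a namespace, a serviceaccount, RBAC objects, a deployment, and a ClusterConfiguration were created.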

[root@k8smaster kubesphere]# kubectl apply -f https://github.com/kubesphere/ks-installer/releases/download/v3.1.0/kubesphere-installer.yaml

customresourcedefinition.apiextensions.k8s.io/clusterconfigurations.installer.kubesphere.io created

namespace/kubesphere-system created

serviceaccount/ks-installer created

clusterrole.rbac.authorization.k8s.io/ks-installer created

clusterrolebinding.rbac.authorization.k8s.io/ks-installer created

deployment.apps/ks-installer created

[root@k8smaster kubesphere]# kubectl apply -f https://github.com/kubesphere/ks-installer/releases/download/v3.1.0/cluster-configuration.yaml

clusterconfiguration.installer.kubesphere.io/ks-installer created

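2.2 Check the installation logs

Looks like a lot of pods haven't come up~~

I ran a command to check: everything in kubesphere-system is up, two pods in kubesphere-controls-system are not, and quite a few in kubesphere-monitoring-system are not. Wasn't this supposed to be a minimal install? Why did it install monitoring too...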

[root@k8smaster kubesphere]# kubectl get pod --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

default nfs-client-provisioner-65c77c7bf9-54rdp 0/1 Error 0 47h

default nginx-f54648c68-dwc5n 1/1 Running 3 6d22h

ingress-nginx nginx-ingress-controller-766fb9f77-85jqd 1/1 Running 4 6d22h

kube-system coredns-7ff77c879f-6wzfs 1/1 Running 8 13d

kube-system coredns-7ff77c879f-t4kmb 1/1 Running 8 13d

kube-system etcd-k8smaster 1/1 Running 8 13d

kube-system kube-apiserver-k8smaster 1/1 Running 8 13d

kube-system kube-controller-manager-k8smaster 1/1 Running 14 13d

kube-system kube-flannel-ds-cq5hv 1/1 Running 10 13d

kube-system kube-flannel-ds-rwv77 1/1 Running 12 13d

kube-system kube-flannel-ds-v4w9v 1/1 Running 10 13d

kube-system kube-proxy-2rn69 1/1 Running 8 13d

kube-system kube-proxy-95g8s 1/1 Running 8 13d

kube-system kube-proxy-f6ndn 1/1 Running 8 13d

kube-system kube-scheduler-k8smaster 1/1 Running 14 13d

kube-system snapshot-controller-0 1/1 Running 0 9m45s

kubesphere-controls-system default-http-backend-857d7b6856-2rlct 0/1 ContainerCreating 0 9m25s

kubesphere-controls-system kubectl-admin-db9fc54f5-6jvpq 0/1 ContainerCreating 0 5m16s

kubesphere-monitoring-system kube-state-metrics-7f65879cfd-444g7 3/3 Running 0 7m17s

kubesphere-monitoring-system node-exporter-4m7fm 0/2 ContainerCreating 0 7m18s

kubesphere-monitoring-system node-exporter-lqjc7 2/2 Running 0 7m18s

kubesphere-monitoring-system node-exporter-sqjwz 2/2 Running 0 7m19s

kubesphere-monitoring-system notification-manager-operator-7877c6574f-gcrs8 0/2 ContainerCreating 0 6m43s

kubesphere-monitoring-system prometheus-operator-7d7684fc68-8qrhh 0/2 ContainerCreating 0 7m21s

kubesphere-system ks-apiserver-7665985458-7t9r5 1/1 Running 0 6m14s

kubesphere-system ks-console-57f7557465-8wrlm 1/1 Running 0 9m11s

kubesphere-system ks-controller-manager-6d5f69c4b-tfrpk 1/1 Running 0 6m14s

kubesphere-system ks-installer-7fd79c664-tljl8 1/1 Running 0 11m
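With this many pods, it helps to filter the listing down to the ones that are not healthy. A minimal sketch, assuming the column layout shown above (the function name `not_running_pods` is hypothetical):

```shell
#!/usr/bin/env bash

# Read `kubectl get pod --all-namespaces` output from stdin and print
# "<namespace>/<pod> <status>" for every pod that is neither Running
# nor Completed. The header line is skipped.
not_running_pods() {
  awk 'NR > 1 && $4 != "Running" && $4 != "Completed" { print $1 "/" $2 " " $4 }'
}

# Usage (requires access to the cluster):
#   kubectl get pod --all-namespaces | not_running_pods
# The installer's own log can be followed through its deployment:
#   kubectl logs -n kubesphere-system deployment/ks-installer -f
```

Note that a pod can be Running without all of its containers being ready, so the READY column is still worth a look for anything that seems stuck.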

2.3 What if the pods won't start?

Reboot... no luck.

[root@k8smaster ~]# kubectl get pod --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

default nfs-client-provisioner-65c77c7bf9-54rdp 0/1 Error 0 47h

default nginx-f54648c68-dwc5n 1/1 Running 3 6d22h

ingress-nginx nginx-ingress-controller-766fb9f77-85jqd 1/1 Running 4 6d23h

kube-system coredns-7ff77c879f-6wzfs 1/1 Running 9 13d

kube-system coredns-7ff77c879f-t4kmb 1/1 Running 9 13d

kube-system etcd-k8smaster 1/1 Running 9 13d

kube-system kube-apiserver-k8smaster 1/1 Running 9 13d

kube-system kube-controller-manager-k8smaster 1/1 Running 15 13d

kube-system kube-flannel-ds-cq5hv 1/1 Running 10 13d

kube-system kube-flannel-ds-rwv77 1/1 Running 14 13d

kube-system kube-flannel-ds-v4w9v 1/1 Running 10 13d

kube-system kube-proxy-2rn69 1/1 Running 9 13d

kube-system kube-proxy-95g8s 1/1 Running 8 13d

kube-system kube-proxy-f6ndn 1/1 Running 8 13d

kube-system kube-scheduler-k8smaster 1/1 Running 15 13d

kube-system snapshot-controller-0 1/1 Running 0 22m

kubesphere-controls-system default-http-backend-857d7b6856-2rlct 0/1 ContainerCreating 0 22m

kubesphere-controls-system kubectl-admin-db9fc54f5-6jvpq 0/1 ContainerCreating 0 17m

kubesphere-monitoring-system kube-state-metrics-7f65879cfd-444g7 3/3 Running 0 19m

kubesphere-monitoring-system node-exporter-4m7fm 0/2 ContainerCreating 0 19m

kubesphere-monitoring-system node-exporter-lqjc7 2/2 Running 0 19m

kubesphere-monitoring-system node-exporter-sqjwz 2/2 Running 2 19m

kubesphere-monitoring-system notification-manager-operator-7877c6574f-gcrs8 0/2 ContainerCreating 0 19m

kubesphere-monitoring-system prometheus-operator-7d7684fc68-8qrhh 0/2 ContainerCreating 0 19m

kubesphere-system ks-apiserver-7665985458-7t9r5 1/1 Running 1 18m

kubesphere-system ks-console-57f7557465-8wrlm 1/1 Running 1 21m

kubesphere-system ks-controller-manager-6d5f69c4b-tfrpk 1/1 Running 1 18m

kubesphere-system ks-installer-7fd79c664-tljl8 1/1 Running 0 24m

First, why won't the NFS provisioner start?

It looks like our NFS server refused the connection.

[root@k8smaster ~]# kubectl describe pod nfs-client-provisioner-65c77c7bf9-54rdp

...

...

Mounting command: systemd-run

Mounting arguments: --description=Kubernetes transient mount for /var/lib/kubelet/pods/daf06a2a-7f31-49e6-8d46-3ed82797b4ee/volumes/kubernetes.io~nfs/nfs-client-root --scope -- mount -t nfs 192.168.182.131:/data/nfs /var/lib/kubelet/pods/daf06a2a-7f31-49e6-8d46-3ed82797b4ee/volumes/kubernetes.io~nfs/nfs-client-root

Output: Running scope as unit run-31568.scope.

mount.nfs: Connection refused

Check the NFS service status on our NFS server

Well, well, it isn't running? Oh, it seems I never enabled start on boot after the last deployment?

[root@k8snfs ~]# ps -ef |grep nfs

avahi 6450 1 0 08:35 ? 00:00:00 avahi-daemon: running [k8snfs.local]

root 16756 15865 0 08:47 pts/0 00:00:00 grep --color=auto nfs

Start the NFS service and check its status

[root@k8snfs ~]# systemctl start nfs

[root@k8snfs ~]# ps -ef |grep nfs

avahi 6450 1 0 08:35 ? 00:00:00 avahi-daemon: running [k8snfs.local]

root 16863 2 0 08:49 ? 00:00:00 [nfsd4_callbacks]

root 16867 2 0 08:49 ? 00:00:00 [nfsd]

root 16868 2 0 08:49 ? 00:00:00 [nfsd]

root 16869 2 0 08:49 ? 00:00:00 [nfsd]

root 16870 2 0 08:49 ? 00:00:00 [nfsd]

root 16871 2 0 08:49 ? 00:00:00 [nfsd]

root 16872 2 0 08:49 ? 00:00:00 [nfsd]

root 16873 2 0 08:49 ? 00:00:00 [nfsd]

root 16874 2 0 08:49 ? 00:00:00 [nfsd]

root 16887 15865 0 08:49 pts/0 00:00:00 grep --color=auto nfs

Enable start on boot

[root@k8snfs ~]# systemctl enable nfs

Created symlink from /etc/systemd/system/multi-user.target.wants/nfs-server.service to /usr/lib/systemd/system/nfs-server.service.

Delete the NFS provisioner pod from before and let it be recreated

Well, well, it still won't come up.

[root@k8smaster ~]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nfs-client-provisioner-65c77c7bf9-dwnvc 0/1 ContainerCreating 0 3m35s <none> k8snode2 <none> <none>

nginx-f54648c68-dwc5n 1/1 Running 3 6d22h 10.244.2.41 k8snode2 <none> <none>

Look into the specific cause

Pulling an image? Let's go look on the node.

[root@k8smaster ~]# kubectl describe pod nfs-client-provisioner-65c77c7bf9-dwnvc

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 3m20s default-scheduler Successfully assigned default/nfs-client-provisioner-65c77c7bf9-dwnvc to k8snode2

Normal Pulling 3m19s kubelet, k8snode2 Pulling image "quay.io/external_storage/nfs-client-provisioner:latest"

Well, well, it's stuck... let's wait.

[root@k8snode2 ~]# docker pull quay.io/external_storage/nfs-client-provisioner:latest

latest: Pulling from external_storage/nfs-client-provisioner

a073c86ecf9e: Pull complete

d9d714ee28a7: Pull complete

36dfde95678a: Downloading 2.668MB

I checked, and node1 already has the image. This time the pod was scheduled to node2, which has to pull it again.

[root@k8snode1 ~]# docker images

...

...

quay.io/external_storage/nfs-client-provisioner latest 16d2f904b0d8 2 years ago 45.5MB

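I'm done waiting; node2 can finish downloading the image on its own. We delete the pod so it gets rescheduled, and this time it landed on node1 and came up right away.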

[root@k8smaster ~]# kubectl delete pod nfs-client-provisioner-65c77c7bf9-dwnvc

pod "nfs-client-provisioner-65c77c7bf9-dwnvc" deleted

[root@k8smaster ~]# kubectl get po

NAME READY STATUS RESTARTS AGE

nfs-client-provisioner-65c77c7bf9-8jvgf 1/1 Running 0 11s

nginx-f54648c68-dwc5n 1/1 Running 3 6d22h

Checking the errors on the other pods, they all seem to be network problems: they are stuck pulling images.

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 36m default-scheduler Successfully assigned kubesphere-controls-system/default-http-backend-857d7b6856-2rlct to k8snode2

Normal Pulling 36m kubelet, k8snode2 Pulling image "mirrorgooglecontainers/defaultbackend-amd64:1.4"
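Since the stuck pods are all waiting on image pulls, one option is to collect the image names from the events and pull them by hand on the affected node. A rough sketch that extracts the names from `kubectl describe pod` output (the function name `pulling_images` is made up for illustration):

```shell
#!/usr/bin/env bash

# Read `kubectl describe pod` output from stdin and print each distinct
# image named in a 'Pulling image "..."' event, one per line.
pulling_images() {
  sed -n 's/.*Pulling image "\([^"]*\)".*/\1/p' | sort -u
}

# Usage (requires access to the cluster):
#   kubectl describe pod default-http-backend-857d7b6856-2rlct \
#     -n kubesphere-controls-system | pulling_images
# Then, on the node the pod was scheduled to (k8snode2 here):
#   docker pull <image>
```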

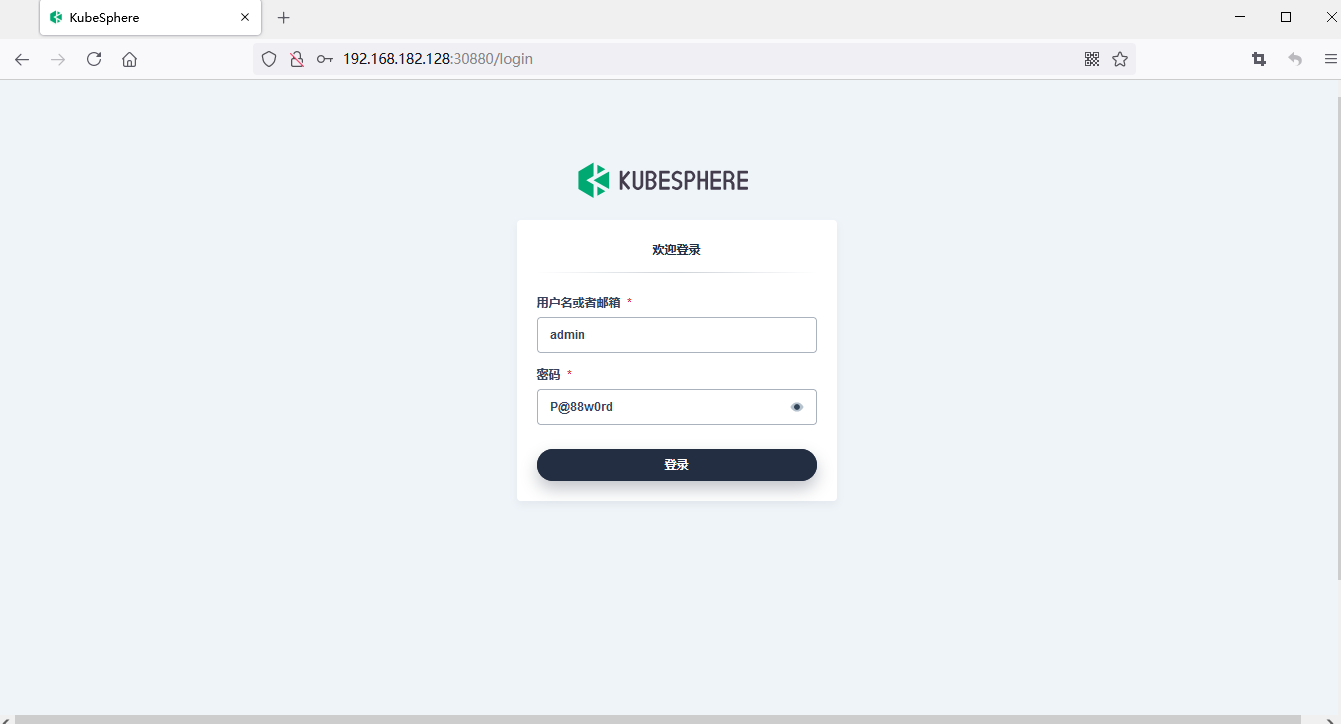

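2.4 Log in to KubeSphere

Most of the pods that won't start are still pulling images. The network is slow, so give them some time.

Meanwhile, let's log in to the web console and take a look.

Access it on port 30880 of any node IP in the cluster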

[root@k8smaster ~]# kubectl get svc/ks-console -n kubesphere-system

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

ks-console NodePort 10.100.99.46 <none> 80:30880/TCP 40m
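The node port is the number after the colon in the PORT(S) column. As a small sketch, the console URL can be assembled from that output; the node IP in the usage line is a placeholder and the function name `console_url` is hypothetical.

```shell
#!/usr/bin/env bash

# Build the console URL from `kubectl get svc/ks-console` output on stdin.
# $1 is the IP of any node in the cluster.
# The PORT(S) column looks like "80:30880/TCP"; the node port is field 2
# after splitting on ":" and "/".
console_url() {
  awk -v ip="$1" 'NR > 1 { split($5, p, "[:/]"); print "http://" ip ":" p[2] }'
}

# Usage (replace <node-ip> with the IP of one of your nodes):
#   kubectl get svc/ks-console -n kubesphere-system | console_url <node-ip>
# Alternatively, kubectl can print just the port via jsonpath:
#   kubectl get svc ks-console -n kubesphere-system \
#     -o jsonpath='{.spec.ports[0].nodePort}'
```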

Log in with the default account and password: admin/P@88w0rd

After logging in, you can see the platform overview page~

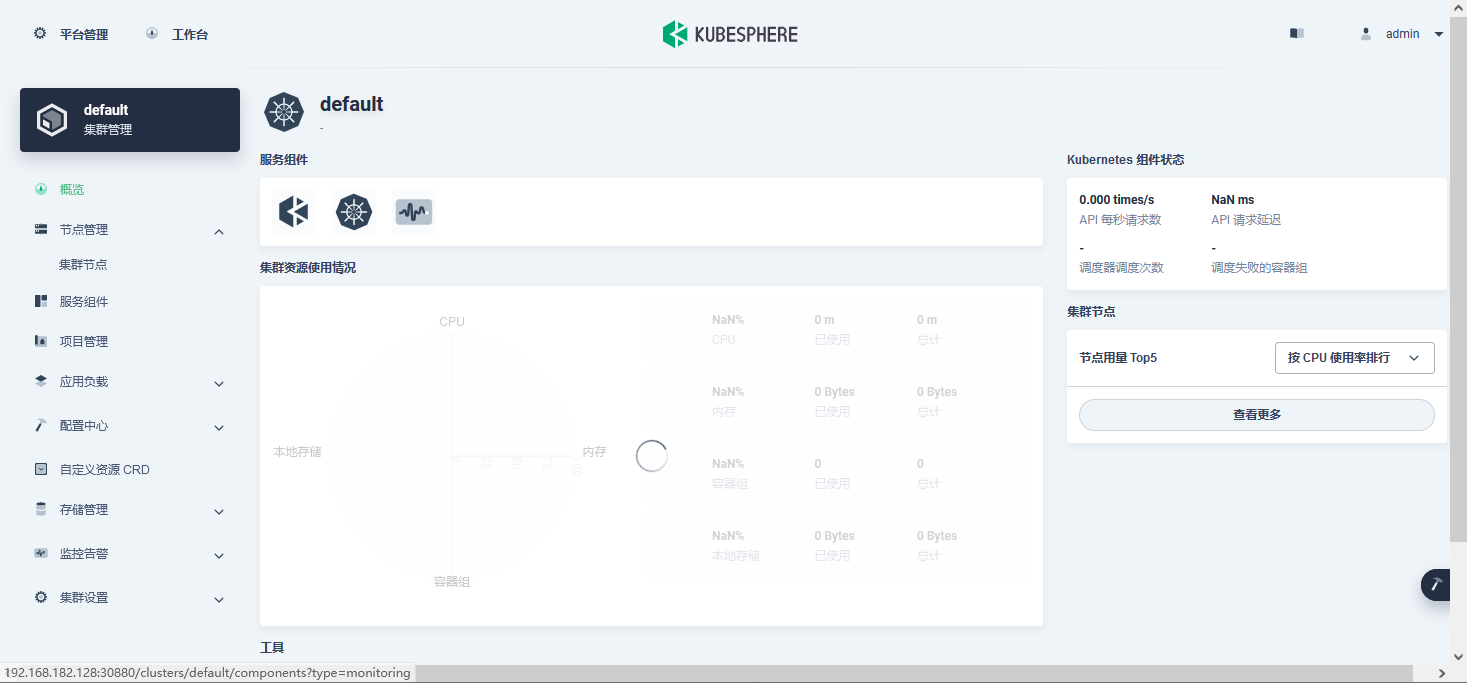

3. Managing our cluster

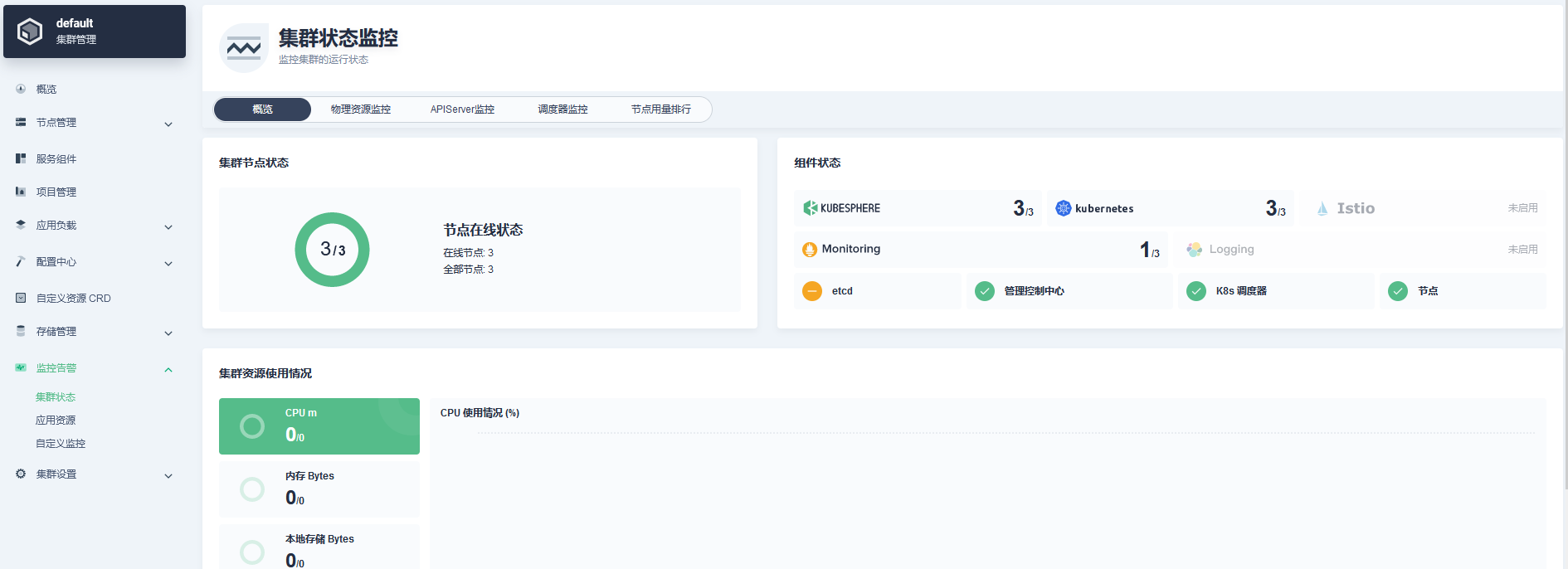

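Click Platform in the top-left corner and select Cluster Management.

Cluster usage isn't visible because the monitoring pods haven't come up. I checked on the backend and they are still pulling images. I'm losing it...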

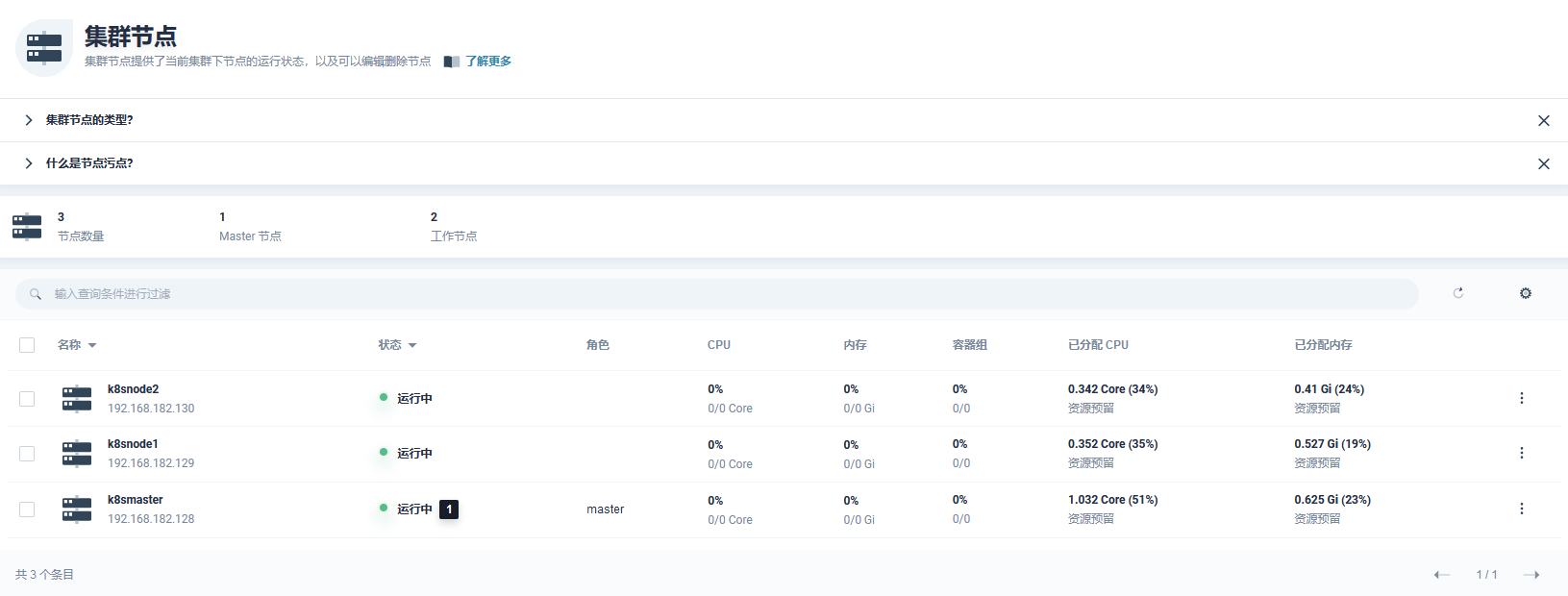

3.1 Cluster node management

Here we can see the cluster's master node and worker nodes, and can start or stop them directly.

3.2 Service components

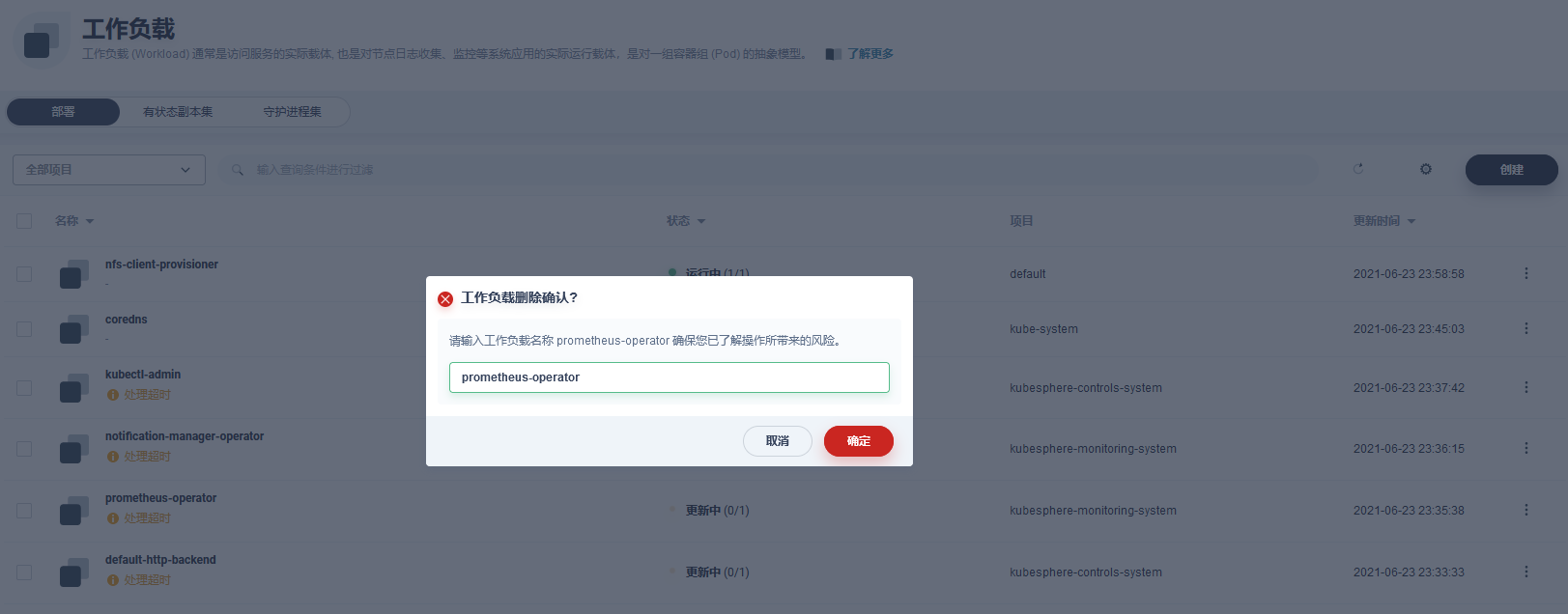

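Haha, none of these monitoring components came up. I'll delete them in a bit, because I don't plan to deploy Prometheus through KubeSphere, so this module isn't needed.

We can see that our K8s cluster components are still perfectly healthy.

3.3 Application workloads

Here we can conveniently operate on all the resources in the cluster.

First, delete Prometheus!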

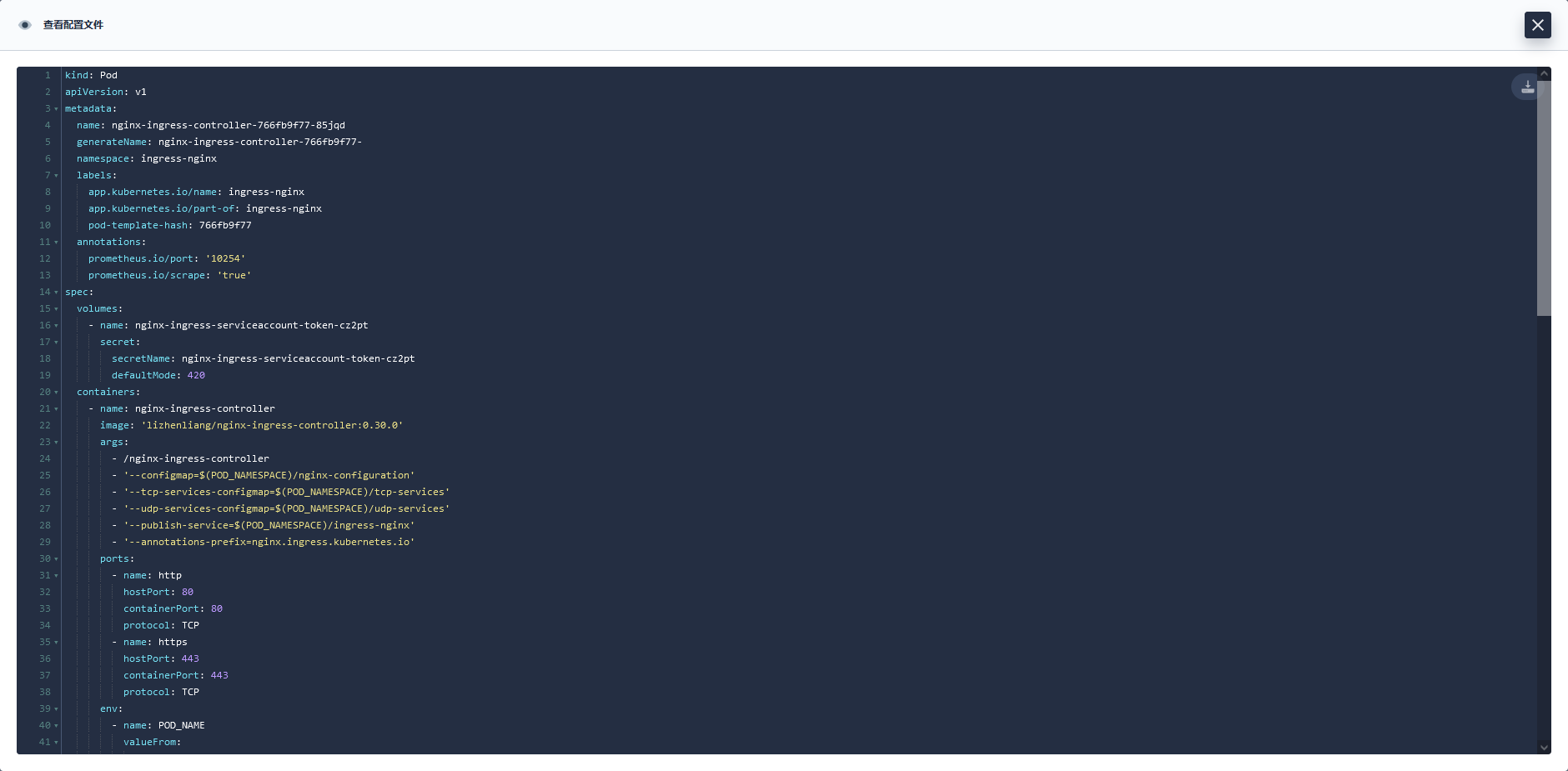

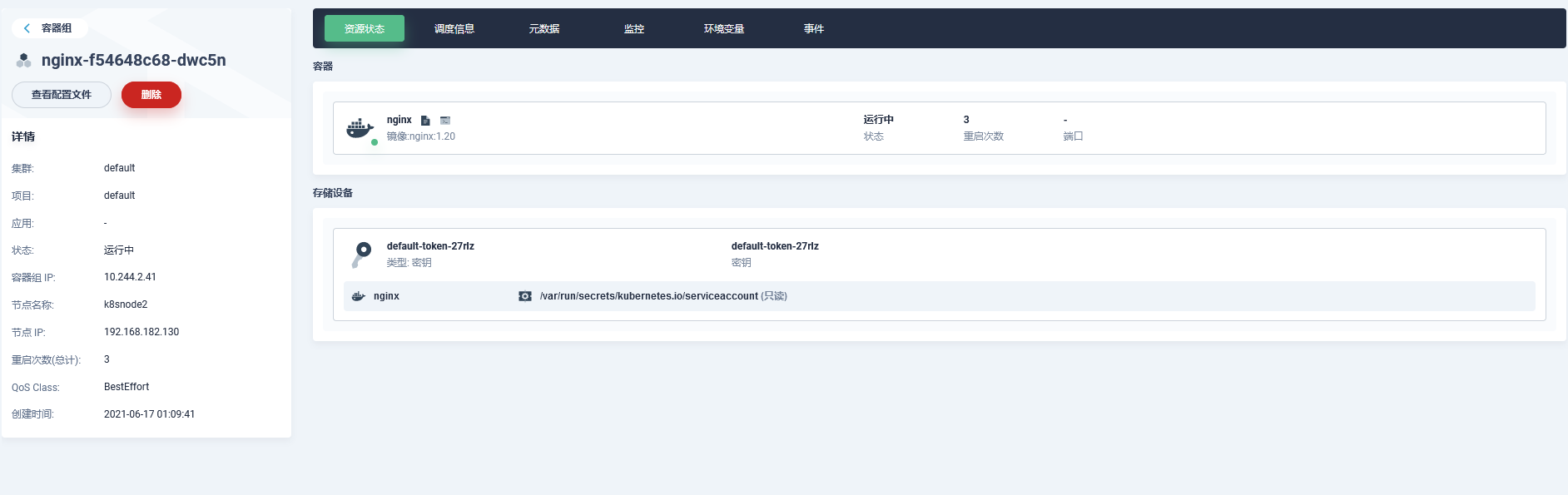

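We can see the nginx application we deployed

It's easy to view its configuration and other details

We can create pods and services

3.4 Monitoring module

You can give KubeSphere's monitoring module a try~ It appears to be built on Prometheus as well, given that the install deployed prometheus-operator.

4. Platform settings

Here you can also configure notification integrations for the platform, including email, DingTalk, and WeCom, but no Lark (/🤭 snicker)

5. More~

KubeSphere also has support for access control~

Go have a detailed look for yourself. I still need to explore more and say goodbye to the black terminal window~😰

This article installed KubeSphere on an existing K8s cluster by following the official documentation step by step and fixing errors as they came up. So far it really is quite pleasant to use, and it looks promising for production~

That's it for this article. KubeSphere's strongest point is probably how easily it integrates third-party applications and cloud-native ecosystem components in a plug-and-play fashion. I'll explore that later~

If you haven't set up K8s yet, you can follow my previous article to quickly build a K8s cluster~

Setting Up Another Kubernetes Cluster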

References

| Name | URL |
|---|---|
| StorageClass | https://kubernetes.io/zh/docs/concepts/storage/storage-classes/ |
| Using an NFS-based StorageClass in K8s | https://blog.csdn.net/dayi_123/article/details/107946953 |
| Changing the default StorageClass | https://kubernetes.io/zh/docs/tasks/administer-cluster/change-default-storage-class/ |
| Installing KubeSphere on Kubernetes | https://kubesphere.com.cn/docs/installing-on-kubernetes/introduction/overview/ |